Notes on using the urllib module in Python 3

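urllib is one of the more commonly used of Python's Internet modules and is mainly used for the HTTP and FTP protocols. Other Internet-related modules include httplib (http.client in Python 3), ftplib, the long-deprecated xmllib, and many more. urllib is a common first choice for writing web crawlers. Python 2 had two separate modules, urllib and urllib2; in Python 3 they were merged into a single urllib package (urllib.request, urllib.parse, urllib.error, urllib.robotparser), and many of the functions changed along the way.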

1. urllib.request.Request(url, data=None, headers={}, origin_req_host=None, unverifiable=False, method=None) # wraps the contents of a request

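Parameters

url: the target URL.

data: the data to submit via POST. According to the official documentation it defaults to None; when it is not None, the request is sent to the server with POST instead of GET. In Python 3 the value must be bytes (or a file-like object or iterable of bytes), not str.

headers: a dictionary. Many servers refuse access from scripts and only serve ordinary browsers, so headers such as User-Agent are set here to imitate a browser.

origin_req_host and unverifiable are used for correct cookie handling. They are not examined in depth here; see the official documentation: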

origin_req_host should be the request-host of the origin transaction, as defined by RFC 2965. It defaults to http.cookiejar.request_host(self). This is the host name or IP address of the original request that was initiated by the user. For example, if the request is for an image in an HTML document, this should be the request-host of the request for the page containing the image.

unverifiable should indicate whether the request is unverifiable, as defined by RFC 2965. It defaults to False. An unverifiable request is one whose URL the user did not have the option to approve. For example, if the request is for an image in an HTML document, and the user had no option to approve the automatic fetching of the image, this should be true.

method is the field that specifies the request method. From the official documentation:

method should be a string that indicates the HTTP request method that will be used (e.g. 'HEAD'). If provided, its value is stored in the method attribute and is used by get_method(). Subclasses may indicate a default method by setting the method attribute in the class itself.

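Demonstrating Request on its own does not achieve much, because nothing is sent until the object is passed to urlopen; read it together with the urlopen section that follows.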
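Even without touching the network, the effect of the parameters can be checked on the Request object itself. The sketch below is only illustrative: the www.example.com URL and the kd form field are placeholders, not taken from the original notes.

```python
from urllib import request

URL = 'http://www.example.com/search'  # placeholder URL (assumption)

# No data: the request defaults to GET.
get_req = request.Request(URL, headers={'User-Agent': 'Mozilla/5.0'})

# data must already be bytes; its presence switches the default to POST.
post_req = request.Request(URL, data=b'kd=Python')  # hypothetical form field

# method overrides the GET/POST default derived from data.
head_req = request.Request(URL, method='HEAD')

print(get_req.get_method())    # GET
print(post_req.get_method())   # POST
print(head_req.get_method())   # HEAD

# Request stores header names capitalized, so the lookup key is 'User-agent'.
print(get_req.get_header('User-agent'))   # Mozilla/5.0
```

None of these calls sends anything; the request only goes out once the object is handed to urlopen.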

2. urllib.request.urlopen(req) # fetches the given page; returns a response object whose read() method returns bytes, which must be decoded with decode('utf-8') to obtain a str

    from urllib import request

    url = 'http://www.lagou.com/zhaopin/Python/?labelWords=label'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '
                      'Chrome/45.0.2454.85 Safari/537.36 115Browser/6.0.3',
        'Referer': 'http://www.lagou.com/zhaopin/Python/?labelWords=label',
        'Connection': 'keep-alive'
    }

    req = request.Request(url, headers=headers)  # wrap the URL together with the header information
    page = request.urlopen(req).read()  # open the URL and read the response body (bytes)
    page = page.decode('utf-8')  # decode the bytes into a str, assuming the page is UTF-8
    print(page)  # print the result

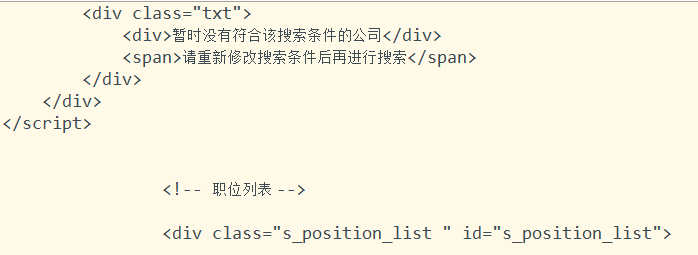

Result of running it:
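As a variation on the example in this section, the following sketch closes the response with a context manager, takes the charset from the response headers instead of hard-coding UTF-8, and catches the exceptions from urllib.error. The helper names fetch_text and try_fetch are made up for illustration and are not part of urllib.

```python
from urllib import error, request


def fetch_text(url, headers=None, timeout=10):
    """Open url and return the response body decoded to str."""
    req = request.Request(url, headers=headers or {})
    # The context manager closes the response once the body has been read.
    with request.urlopen(req, timeout=timeout) as resp:
        raw = resp.read()  # bytes
        # Use the charset declared in Content-Type, falling back to UTF-8.
        charset = resp.headers.get_content_charset() or 'utf-8'
    return raw.decode(charset, errors='replace')


def try_fetch(url, headers=None):
    """Like fetch_text, but print the problem and return None on failure."""
    try:
        return fetch_text(url, headers)
    except error.HTTPError as exc:  # the server answered with an error status
        print('HTTP error:', exc.code, exc.reason)
    except error.URLError as exc:   # DNS failure, refused connection, unknown scheme
        print('URL error:', exc.reason)
    return None
```

A call such as try_fetch(url, headers), using the url and headers from the example in this section, would then return either the page text or None. HTTPError is caught first because it is a subclass of URLError.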

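3. urllib.parse: URL parsing components

The main job of this module is to split URL strings into their components and to convert URLs into standard (percent-encoded) URL strings.

Its functions fall into two broad groups: URL parsing (urlparse and related functions) and URL quoting (quote and related functions).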
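To give a feel for the two groups, here is a minimal sketch that applies urlparse, parse_qs, quote and unquote to the lagou URL used earlier in these notes. The string 'Python 3' is just sample input.

```python
from urllib import parse

url = 'http://www.lagou.com/zhaopin/Python/?labelWords=label'

# Parsing group: split the URL into named components.
parts = parse.urlparse(url)
print(parts.scheme)   # http
print(parts.netloc)   # www.lagou.com
print(parts.path)     # /zhaopin/Python/
print(parts.query)    # labelWords=label

# parse_qs turns the query string into a dict of lists.
print(parse.parse_qs(parts.query))   # {'labelWords': ['label']}

# Quoting group: quote percent-encodes unsafe characters, unquote reverses it.
encoded = parse.quote('Python 3')
print(encoded)                  # Python%203
print(parse.unquote(encoded))   # Python 3
```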

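For now I will only cover urlencode, from the quoting group, since it is the one I have run into.

urlencode converts request data (usually a dict) into a percent-encoded ASCII string of the form key1=value1&key2=value2, which avoids the errors that non-ASCII or reserved characters would otherwise cause.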
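urlencode is most often applied to query parameters or form fields. The sketch below shows both uses; the field names (city, kd, pn) and the www.example.com URL are assumptions made up for the example.

```python
from urllib import parse, request

params = {'city': '北京', 'kd': 'Python 3', 'pn': 1}  # hypothetical fields

query = parse.urlencode(params)
print(query)   # city=%E5%8C%97%E4%BA%AC&kd=Python+3&pn=1

# As the query string of a GET request:
get_url = 'http://www.example.com/search?' + query

# As a POST body: urlencode returns str, but Request expects bytes.
body = query.encode('ascii')
req = request.Request('http://www.example.com/search', data=body)
print(req.get_method())   # POST
```

Non-ASCII values are encoded as UTF-8 before being percent-encoded, and spaces become +, so the result is always plain ASCII and the encode('ascii') step cannot fail.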

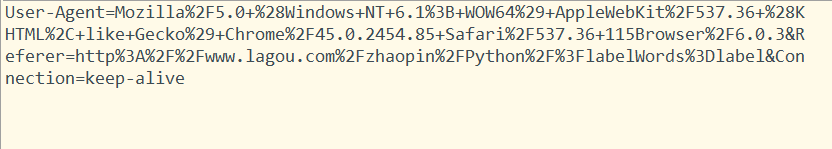

    from urllib import parse

    # urlencode accepts any mapping; the headers dict serves here only as sample input
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '
                      'Chrome/45.0.2454.85 Safari/537.36 115Browser/6.0.3',
        'Referer': 'http://www.lagou.com/zhaopin/Python/?labelWords=label',
        'Connection': 'keep-alive'
    }

    h = parse.urlencode(headers)  # percent-encode every key and value and join them with &
    print(h)

Output: